Forward Looking: AI Assistant

I can never resist a deep dive into ue5-main. Especially when a new feature appears. I've heard buzz about it on X and now it's time to pull back the curtain and share a breakdown of the AIAssistant.

AIAssistant: A New Module

Recent commits in UnrealEngine/Engine/Plugins/Experimental/ grabbed my attention. The key takeaway here is its location: within the Experimental plugins folder. This means it's under active development, subject to change, not yet ready for prime time. But it's coming, and it's exciting.

Within the AIAssistant plugin, we find its core module, also named AIAssistant.

First Impressions: A Web-Powered, Editor-Integrated Partner

Upon inspecting AIAssistant.uplugin, it's clear this is an Editor type plugin. It relies on PythonScriptPlugin and EditorScriptingUtilities, hinting at what is to come.

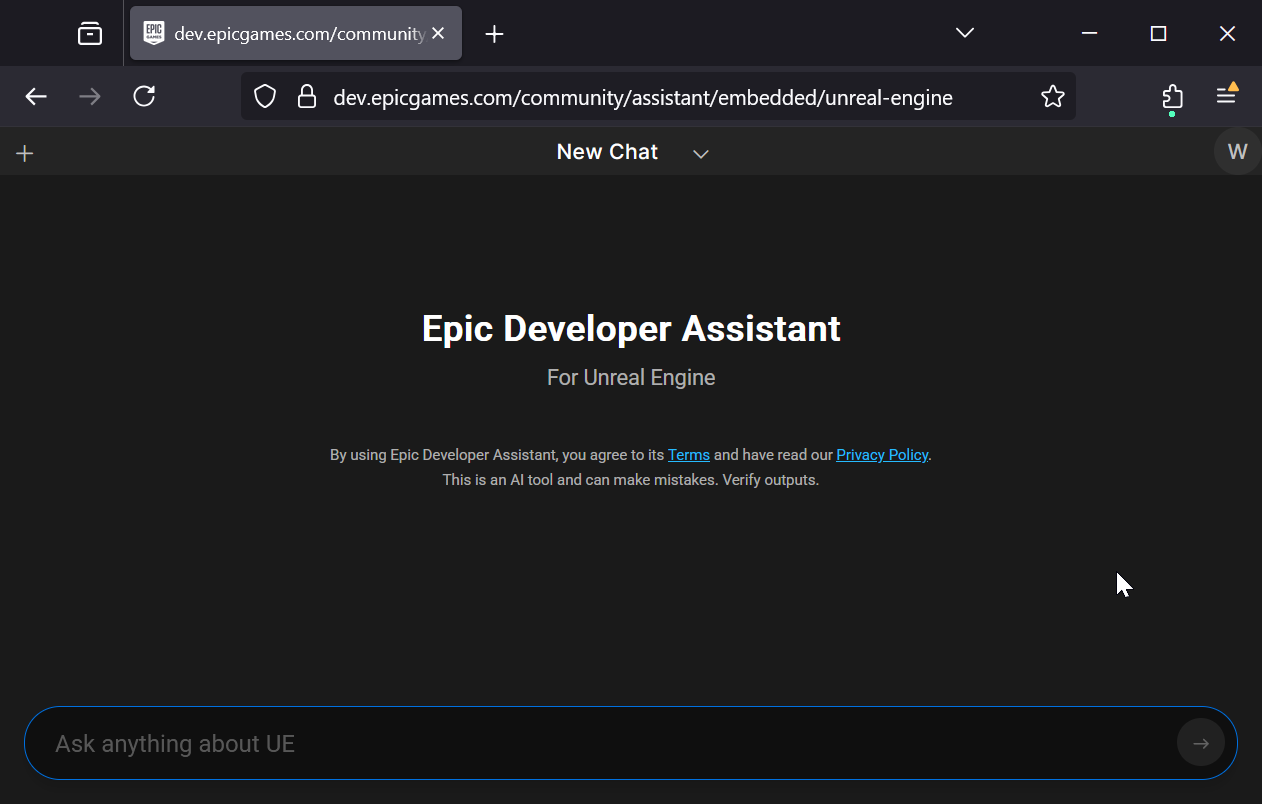
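
For readers who have not opened a plugin descriptor before, here is a minimal sketch of how those two facts (an Editor-type module plus plugin dependencies) can be read out of a .uplugin file, which is plain JSON. The descriptor below is a hypothetical stand-in: the field names follow the standard .uplugin layout, but the values are my assumptions and are not copied from AIAssistant.uplugin. The `summarize` helper is likewise made up for illustration.

```python
import json

# Hypothetical descriptor: field names follow the .uplugin format, but the
# values are illustrative assumptions, not the real AIAssistant.uplugin.
DESCRIPTOR = """
{
  "FileVersion": 3,
  "FriendlyName": "AI Assistant",
  "IsExperimentalVersion": true,
  "Modules": [
    {"Name": "AIAssistant", "Type": "Editor", "LoadingPhase": "Default"}
  ],
  "Plugins": [
    {"Name": "PythonScriptPlugin", "Enabled": true},
    {"Name": "EditorScriptingUtilities", "Enabled": true}
  ]
}
"""


def summarize(descriptor_text: str) -> dict:
    """Return module types and enabled plugin dependencies from a descriptor."""
    data = json.loads(descriptor_text)
    # Map each module name to its type (for example "Editor" or "Runtime").
    modules = {m["Name"]: m["Type"] for m in data.get("Modules", [])}
    # Keep only the plugin dependencies that are switched on.
    dependencies = [p["Name"] for p in data.get("Plugins", []) if p.get("Enabled")]
    return {"modules": modules, "dependencies": dependencies}


summary = summarize(DESCRIPTOR)
print(summary["modules"])
print(summary["dependencies"])
```

Running the same helper over the real file on disk would only require swapping the string for the file's contents.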

The UI classes seem focused on providing an embedded web browser to interact with a ChatGPT-like interface. In FAIAssistantConfig, I found the DefaultMainUrl pointing us at https://dev.epicgames.com/community/assistant/embedded/unreal-engine and promptly decided to try it out. (pun intended)

Accessing it should be more useful once inside the editor.

I tried it, to no avail. In-editor, the chat panel is still web-based but embedded in an editor window, similar to how you can browse the Fab store.

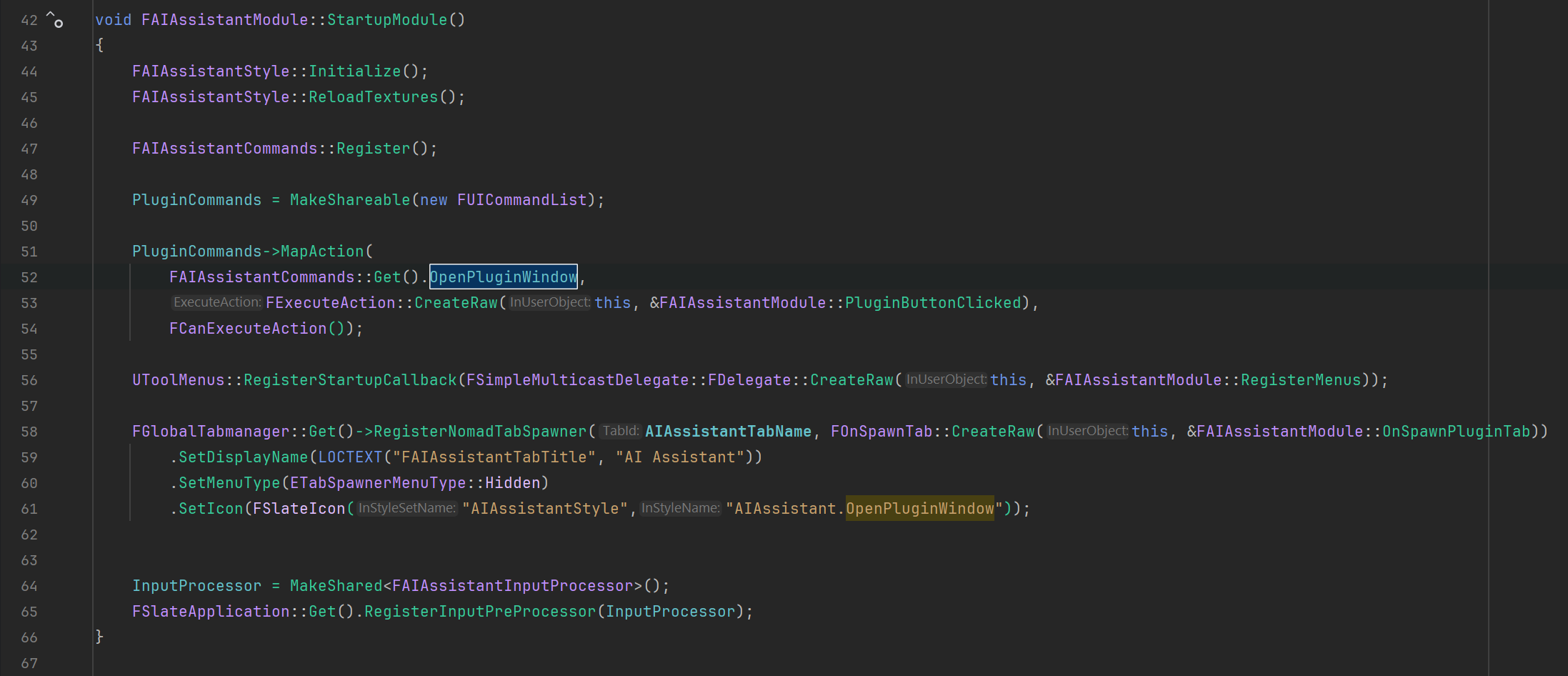

During the module startup phase, I can tell the plugin should be adding some buttons to our editor to experiment with. Should be fun to test out as soon as my engine finishes compiling.

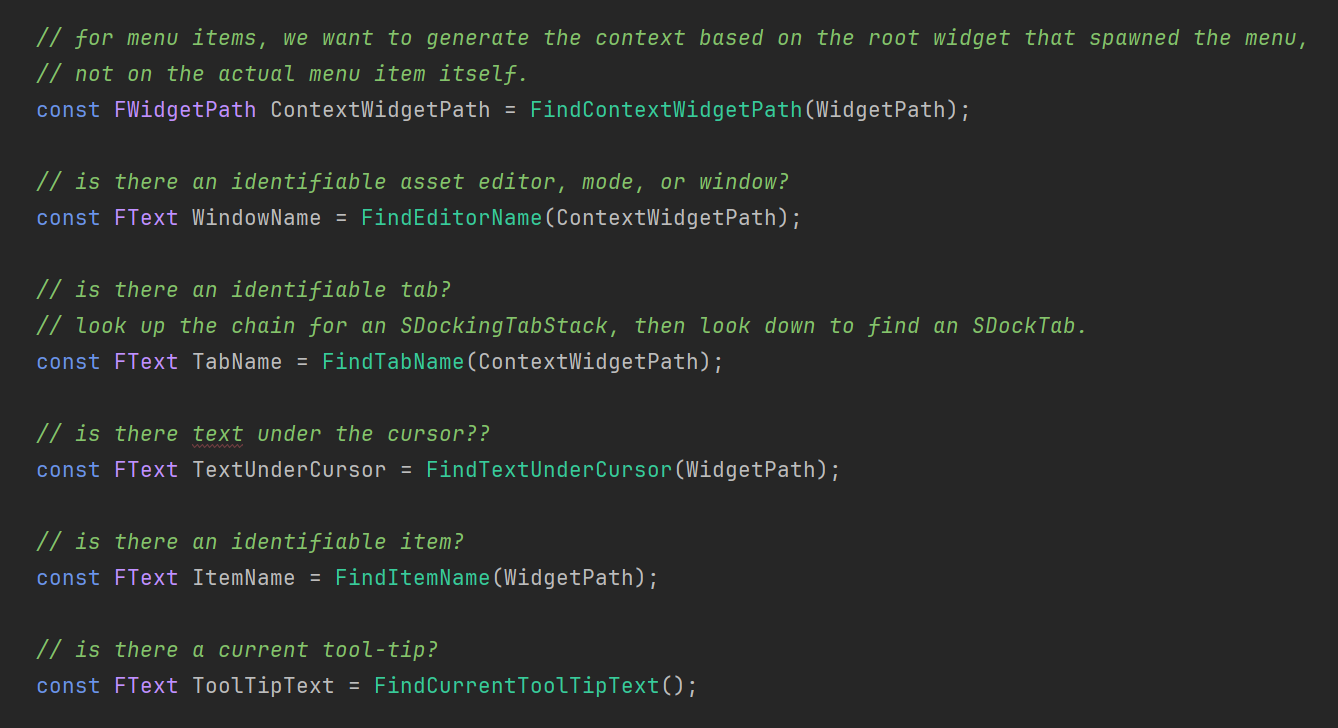

While we wait, I'll take a look at that FAIAssistantInputProcessor as it sounds interesting. Opening up the file shows me AIAssistant’s input processor:

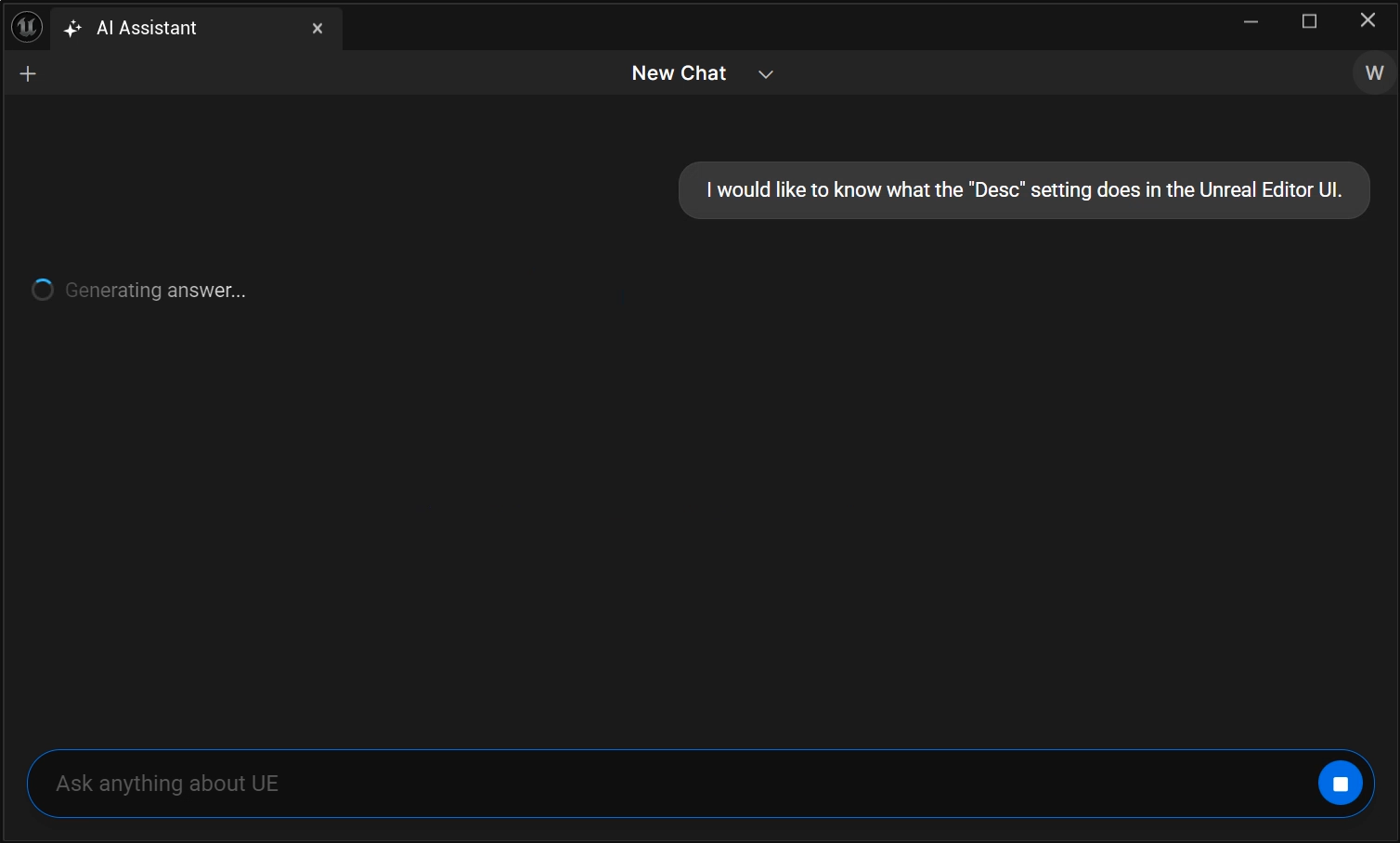

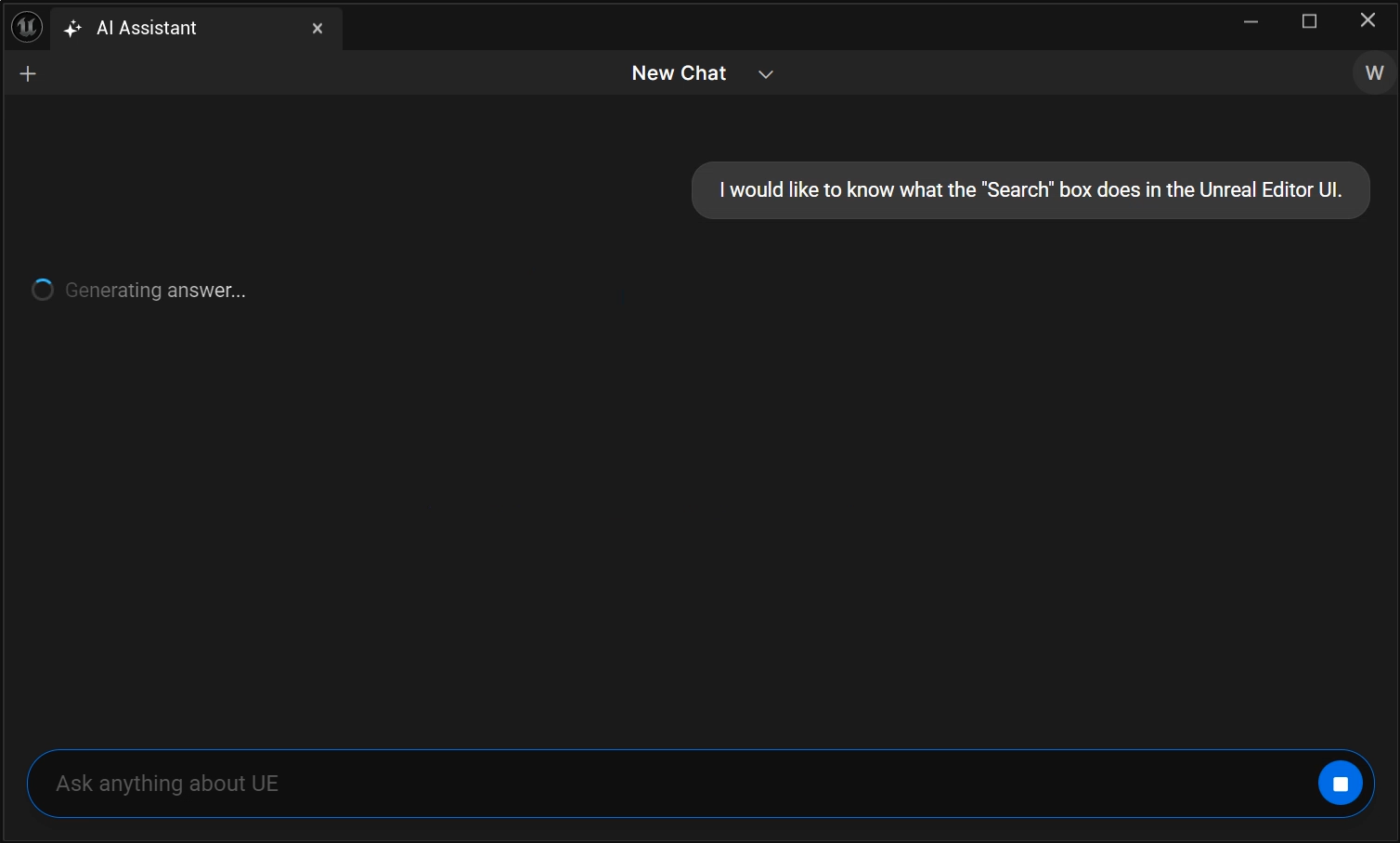

- Introduces "Explain this UI", which lets you press Shift+F1 to query the element under your cursor.

- Captures rich editor context automatically (Level Editor or specific Asset Editor, current tab/panel, active mode/tool).

- Identifies the exact control under the cursor (buttons, toolbar buttons, menu items, search fields, details properties, graph nodes), using labels or tooltips when needed.

- Reads visible tooltip text and the first selected actor/object class from the Details panel for extra context.

- Crafts a concise prompt explaining what the item does and, if clickable, what happens when you click it (including notable side effects).

- Sends the prompt plus consolidated, whitespace-cleaned context to a new AI chat thread for a quick, conversational answer.
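
To make the steps in that list more concrete, here is a rough sketch of the same flow in Python: collect context, build a short question, and collapse the whitespace before sending. Everything in it is an assumption on my part. `UiContext`, `clean_whitespace`, `build_prompt`, and the prompt wording are hypothetical names and text, not what FAIAssistantInputProcessor actually contains (the real thing is C++).

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class UiContext:
    """Hypothetical snapshot of what is under the cursor."""
    editor: str                       # e.g. "Level Editor" or an asset editor
    tab: str                          # current tab or panel
    control_type: str                 # e.g. "button", "menu item", "graph node"
    label: str                        # label, or tooltip text used as fallback
    tooltip: Optional[str] = None     # visible tooltip text, if any
    selected_class: Optional[str] = None  # class of the first selected object
    clickable: bool = False


def clean_whitespace(text: str) -> str:
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return re.sub(r"\s+", " ", text).strip()


def build_prompt(ctx: UiContext) -> str:
    """Build a short question plus one consolidated line of context."""
    question = f'Explain what the {ctx.control_type} "{ctx.label}" does.'
    if ctx.clickable:
        question += (" Describe what happens when it is clicked,"
                     " including notable side effects.")
    parts = [f"Editor: {ctx.editor}", f"Tab: {ctx.tab}"]
    if ctx.tooltip:
        parts.append(f"Tooltip: {ctx.tooltip}")
    if ctx.selected_class:
        parts.append(f"Selected class: {ctx.selected_class}")
    context = clean_whitespace("; ".join(parts))
    return f"{question}\nContext: {context}"


example = UiContext(
    editor="Level Editor",
    tab="Details",
    control_type="button",
    label="Add Component",
    tooltip="  Adds a new\n component  ",
    clickable=True,
)
print(build_prompt(example))
```

The point of the whitespace pass is that tooltips and panel text tend to arrive with stray newlines and padding, and a single tidy context line is easier for a chat backend to digest.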

Well, that's definitely interesting. This is the kind of magical, next-gen, no-friction workflow I live for and try to embody in my own creations, such as my plugins and assets on Fab. Go check them out! (Take that, Reddit, with your "no self promotion" bullshit, this is MY platform.)

Anyways, enough of the guesswork: the engine has started.

Hands On: More like hands off the keyboard because of AI

It's done, we're all finished. There's no more jobs in the industry starting now. They're here to replace us. We're all doomed.

Source code is one thing. I want to take this tool for a spin and have but one question: does it work for people not (yet) employed by Epic Games?

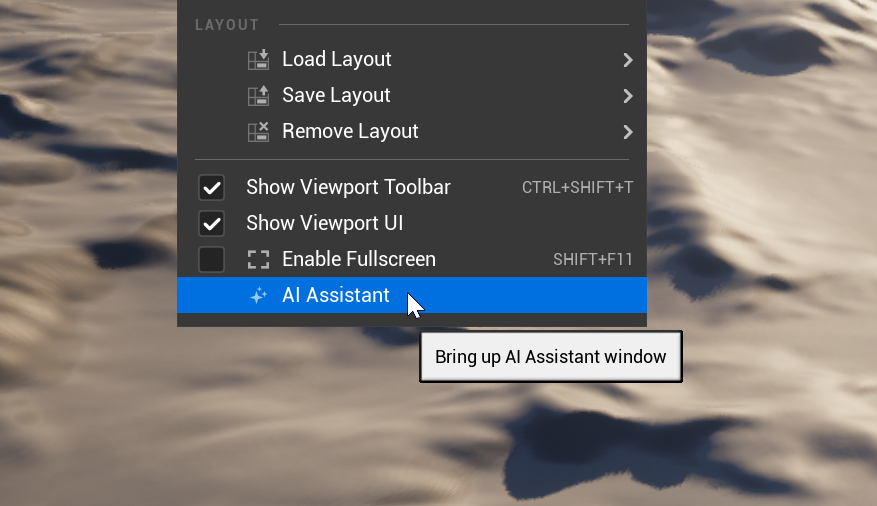

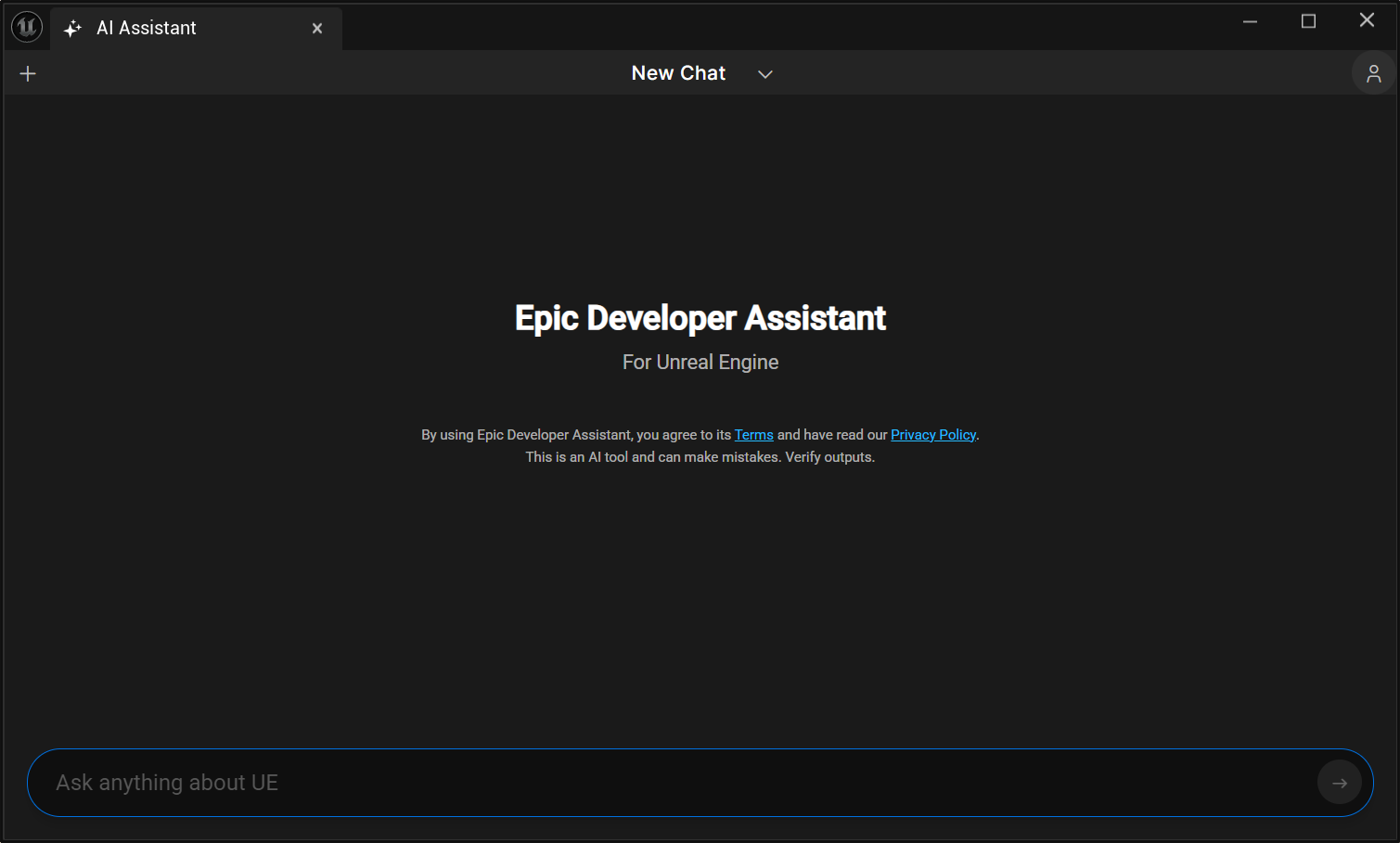

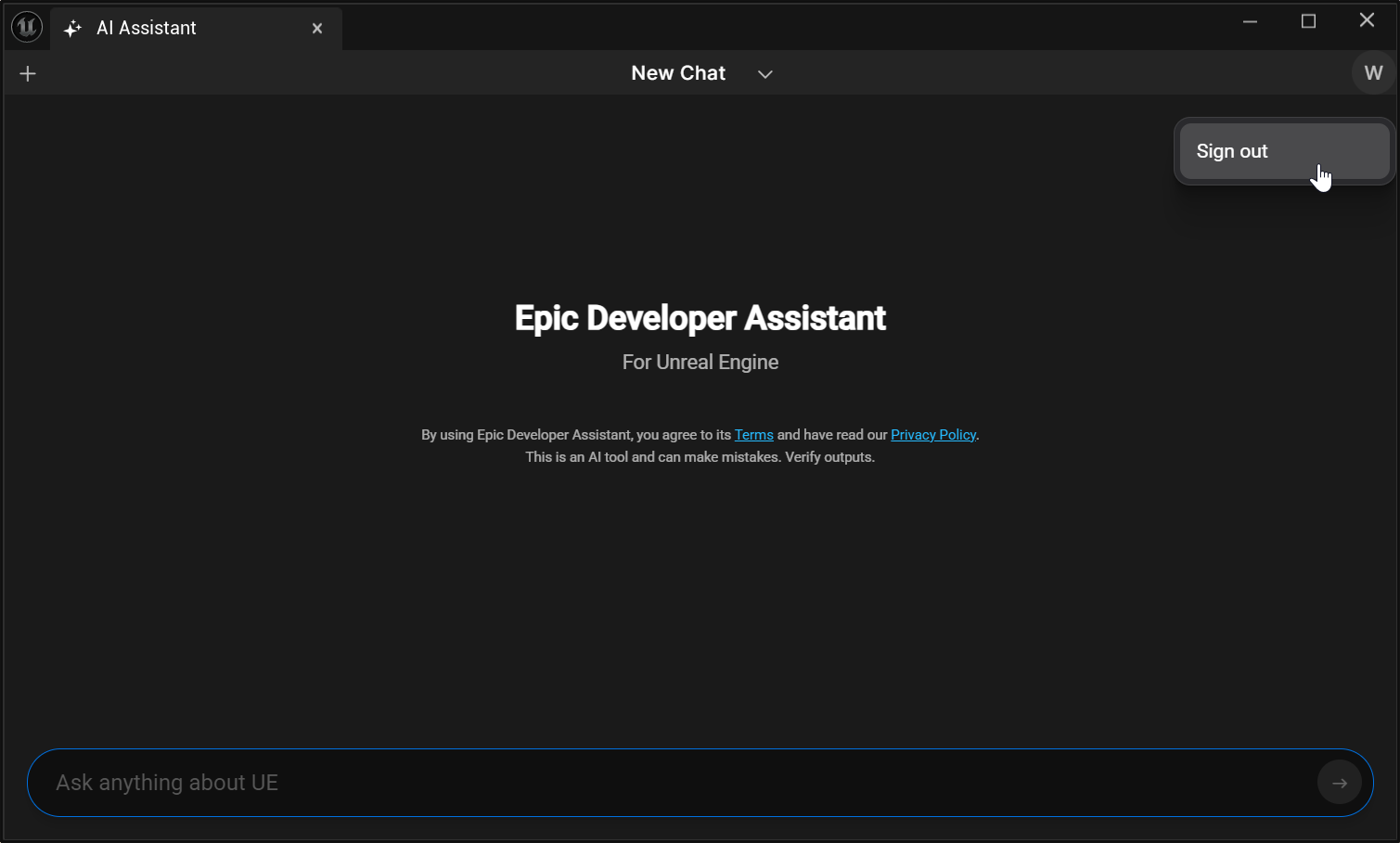

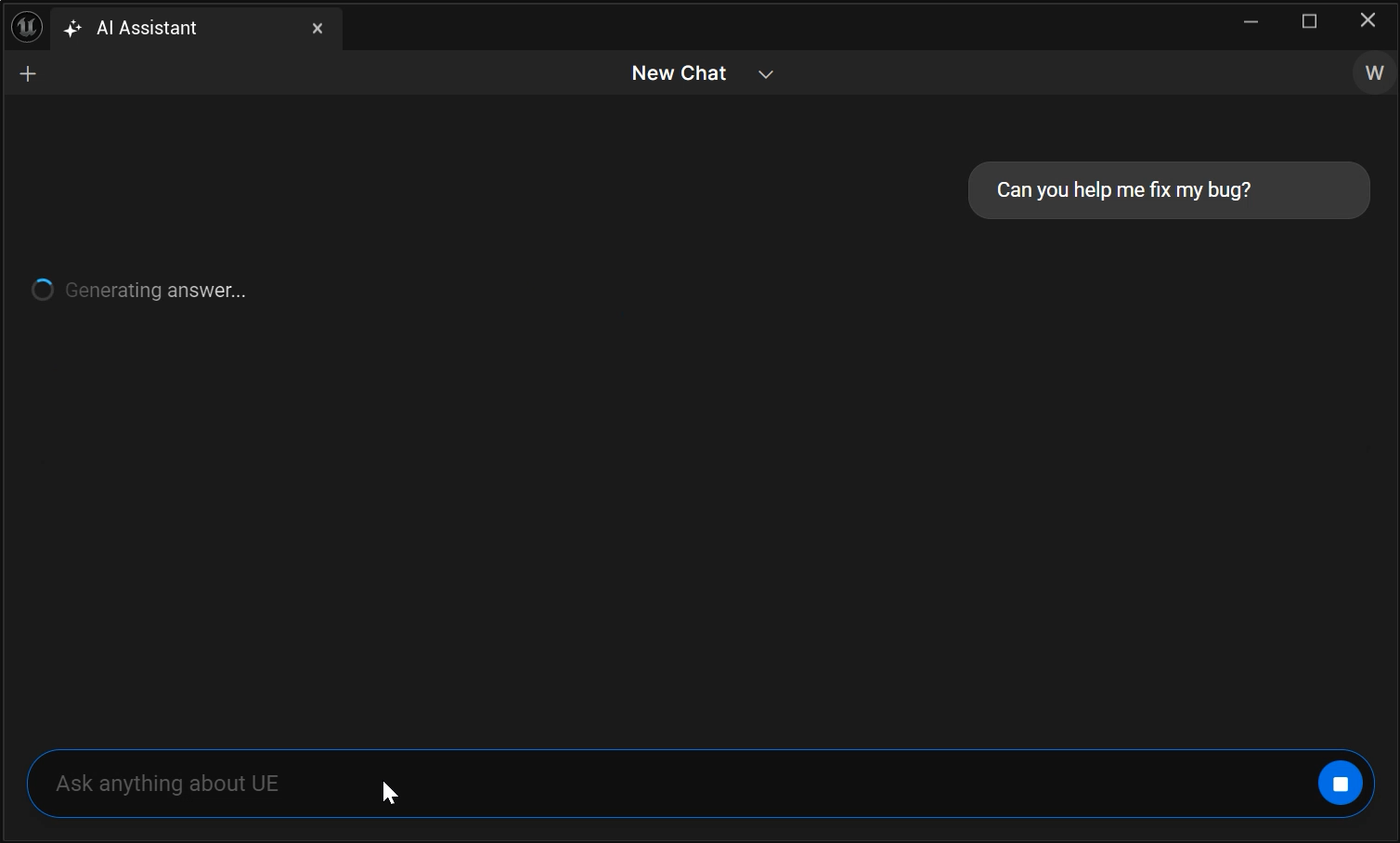

Pressing the button opens up something we're used to by this point.

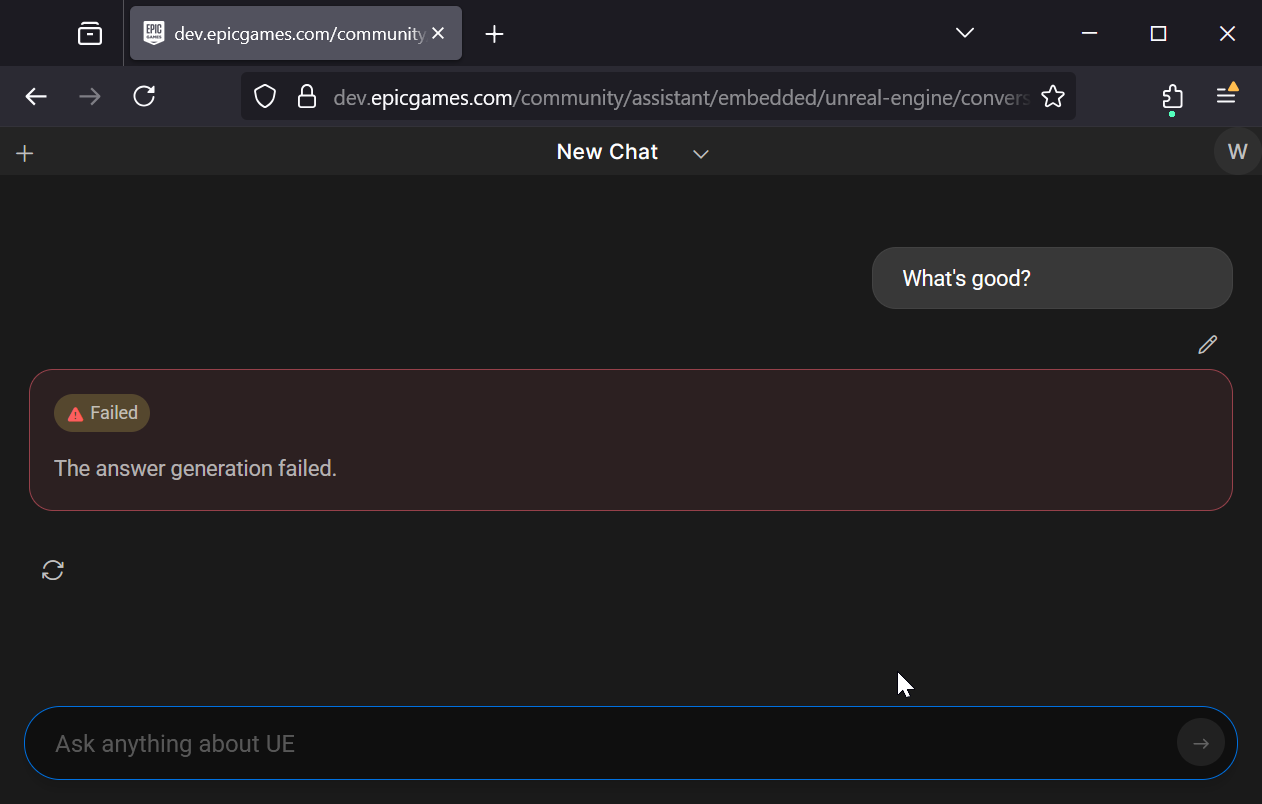

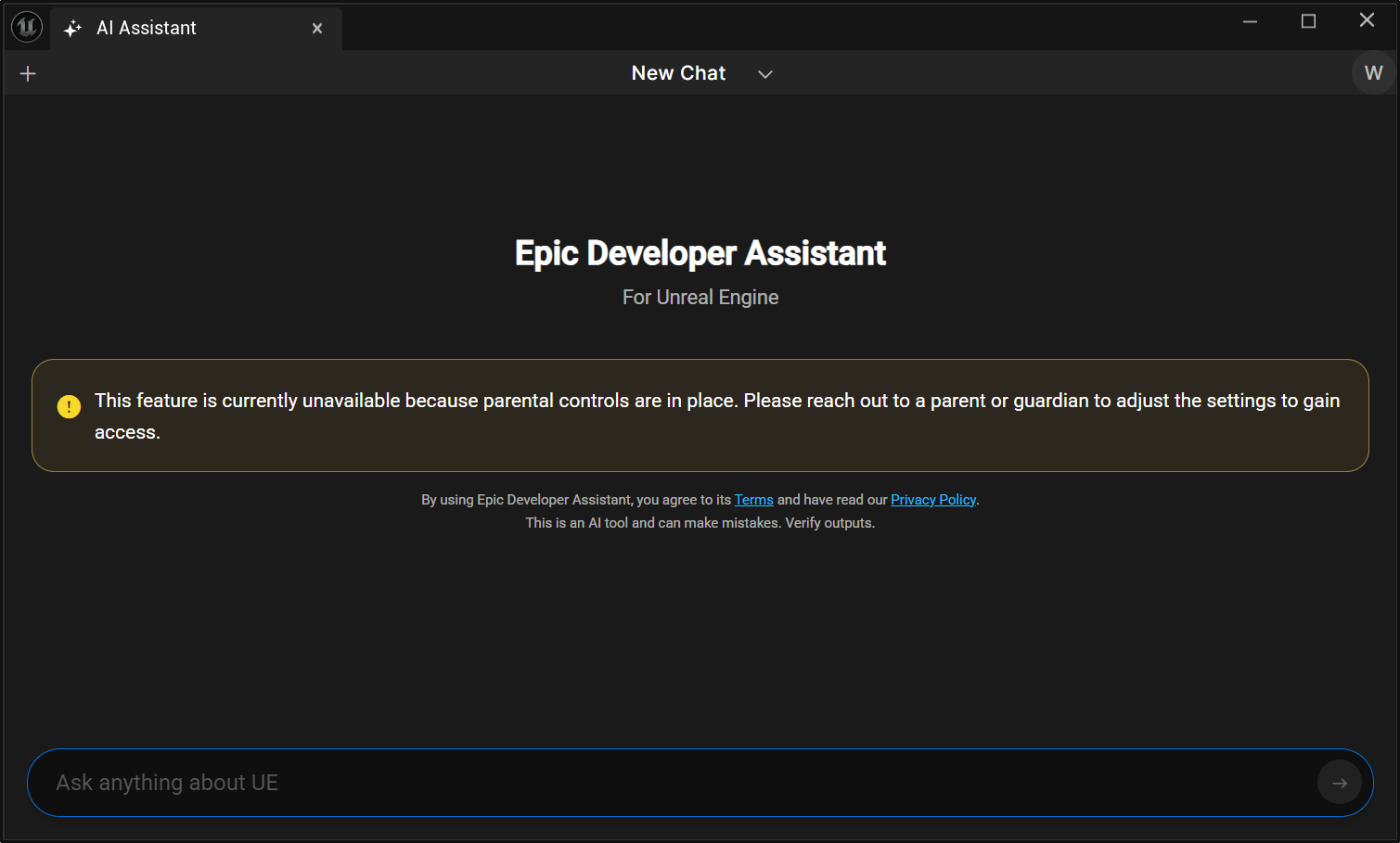

But it pains me to say this: It's experimental and not yet enabled for outsiders like us. I tried the service, no reply. I then tried logging in, but that didn't help either. My Epic Games account does not have permission to make API calls.

Eventually I even ran into this:

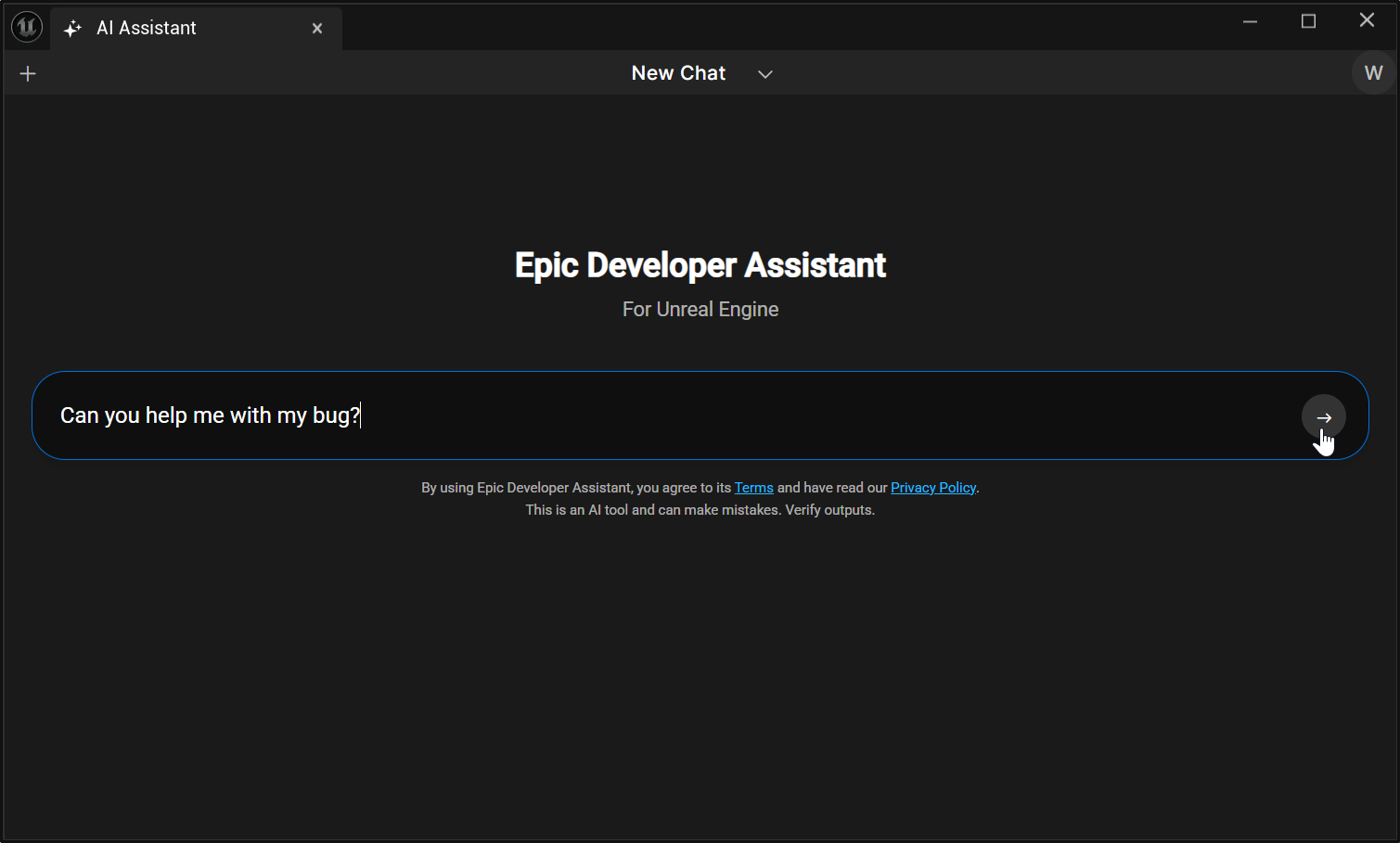

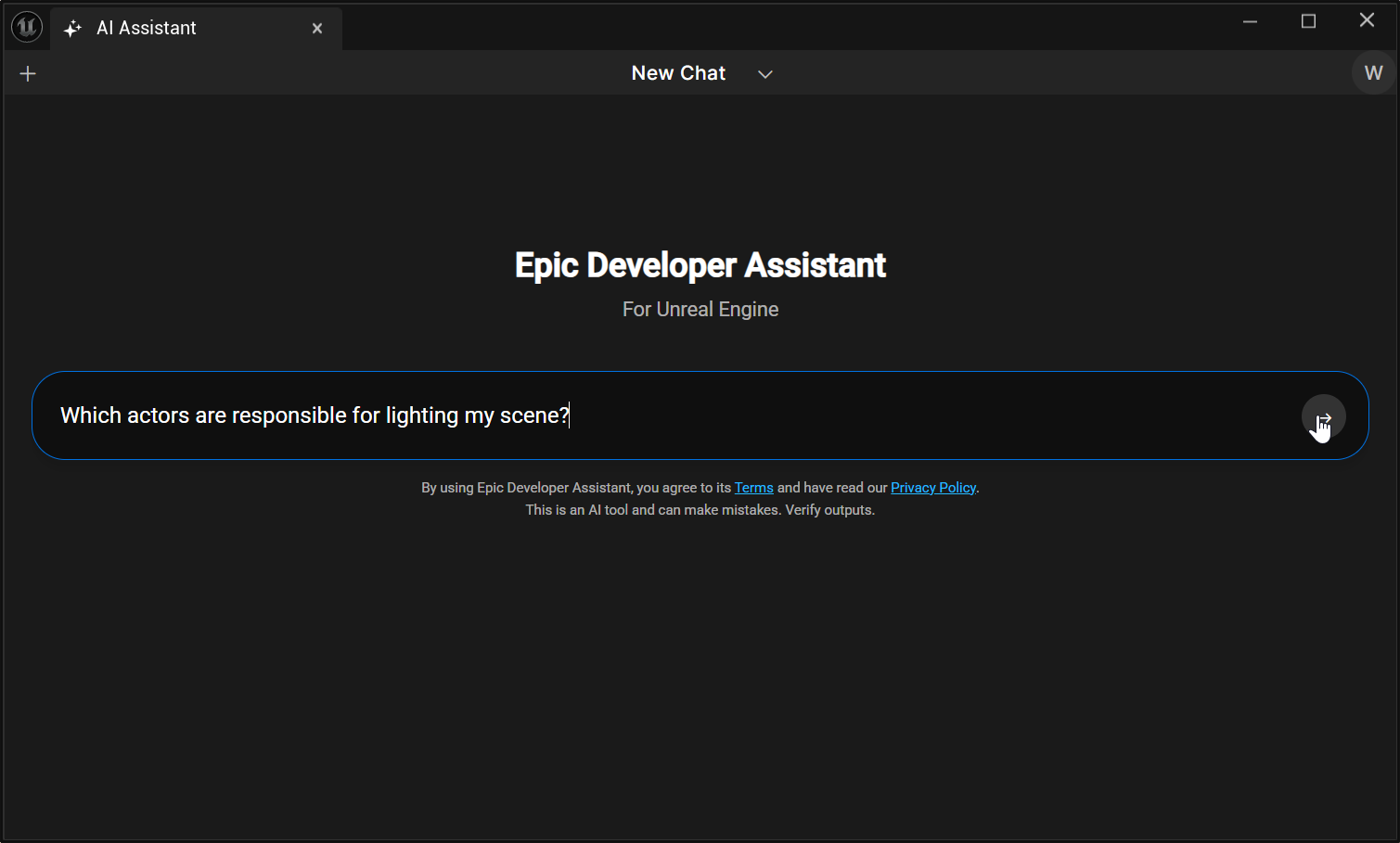

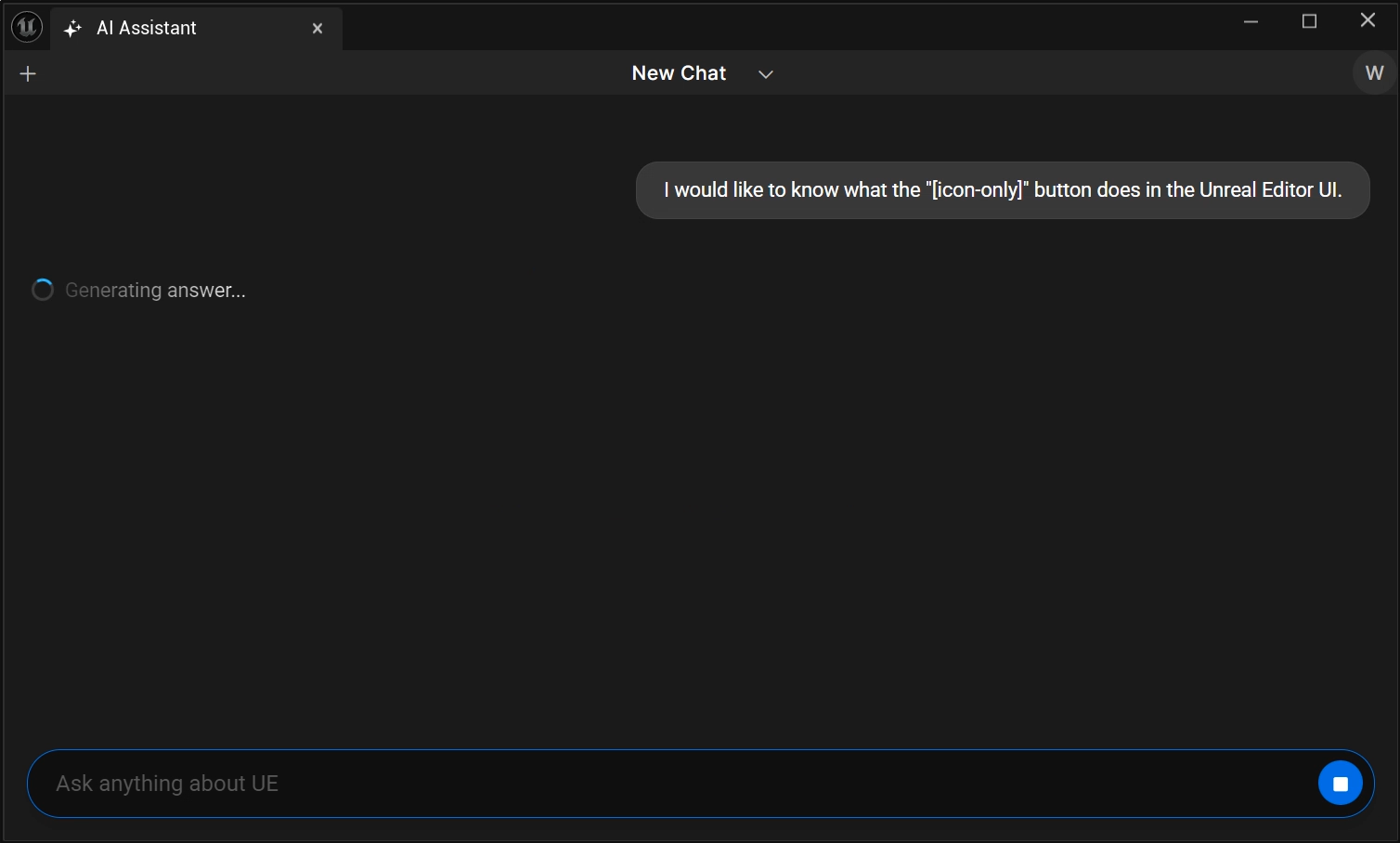

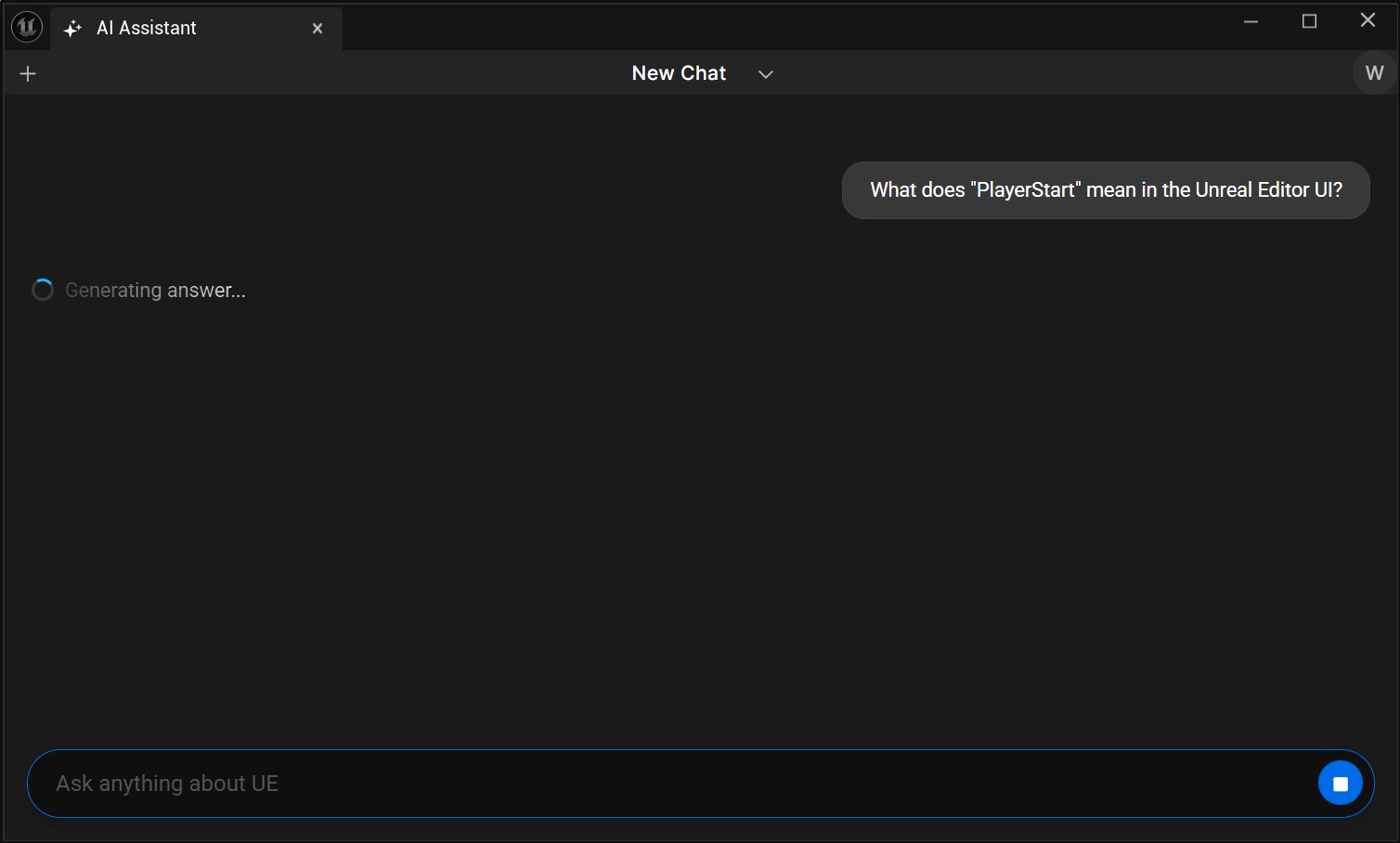

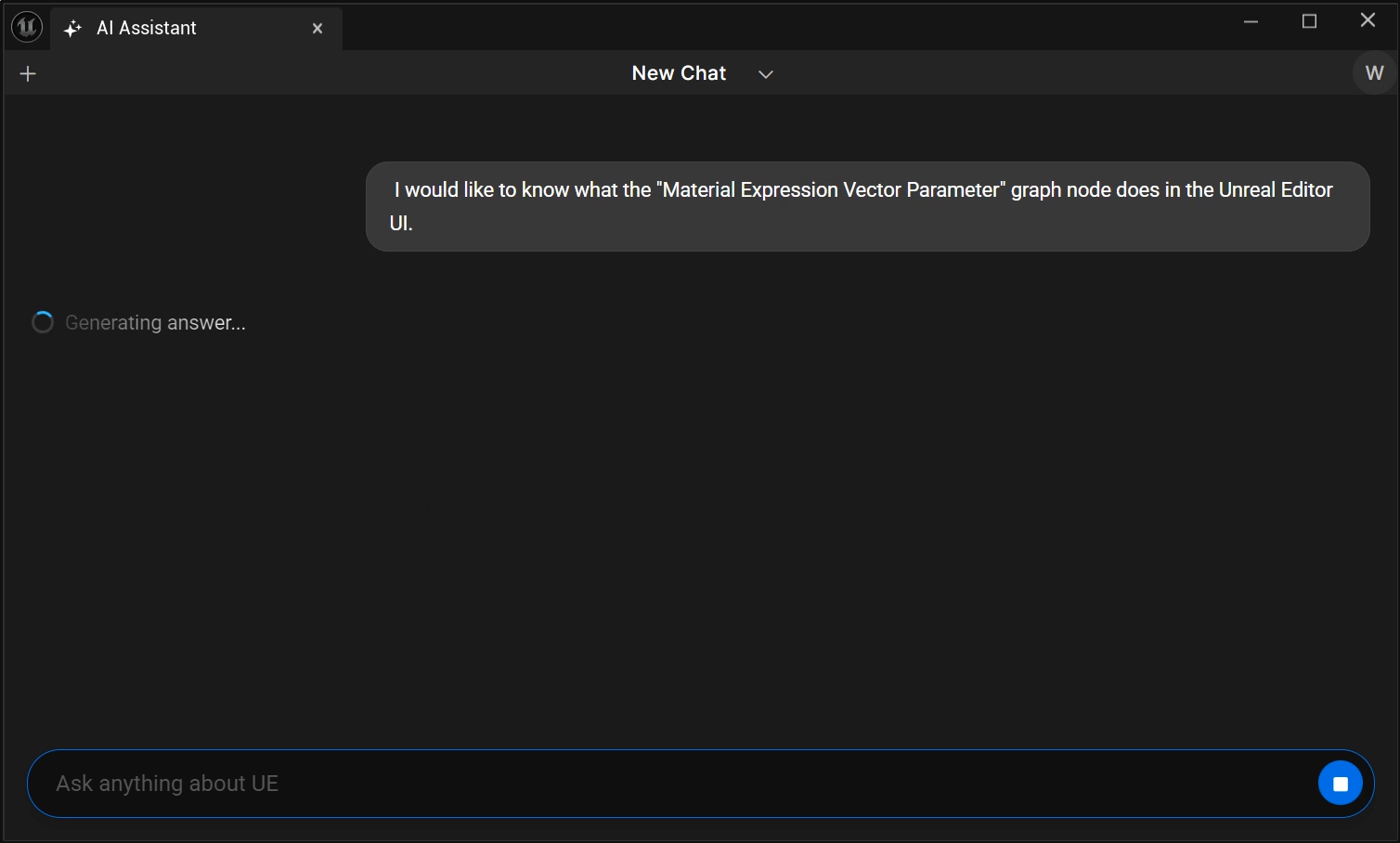

I noticed that each time I desperately asked about UE features, I caught a brief glimpse of the chat layout before it disappeared, so I took a screenshot.

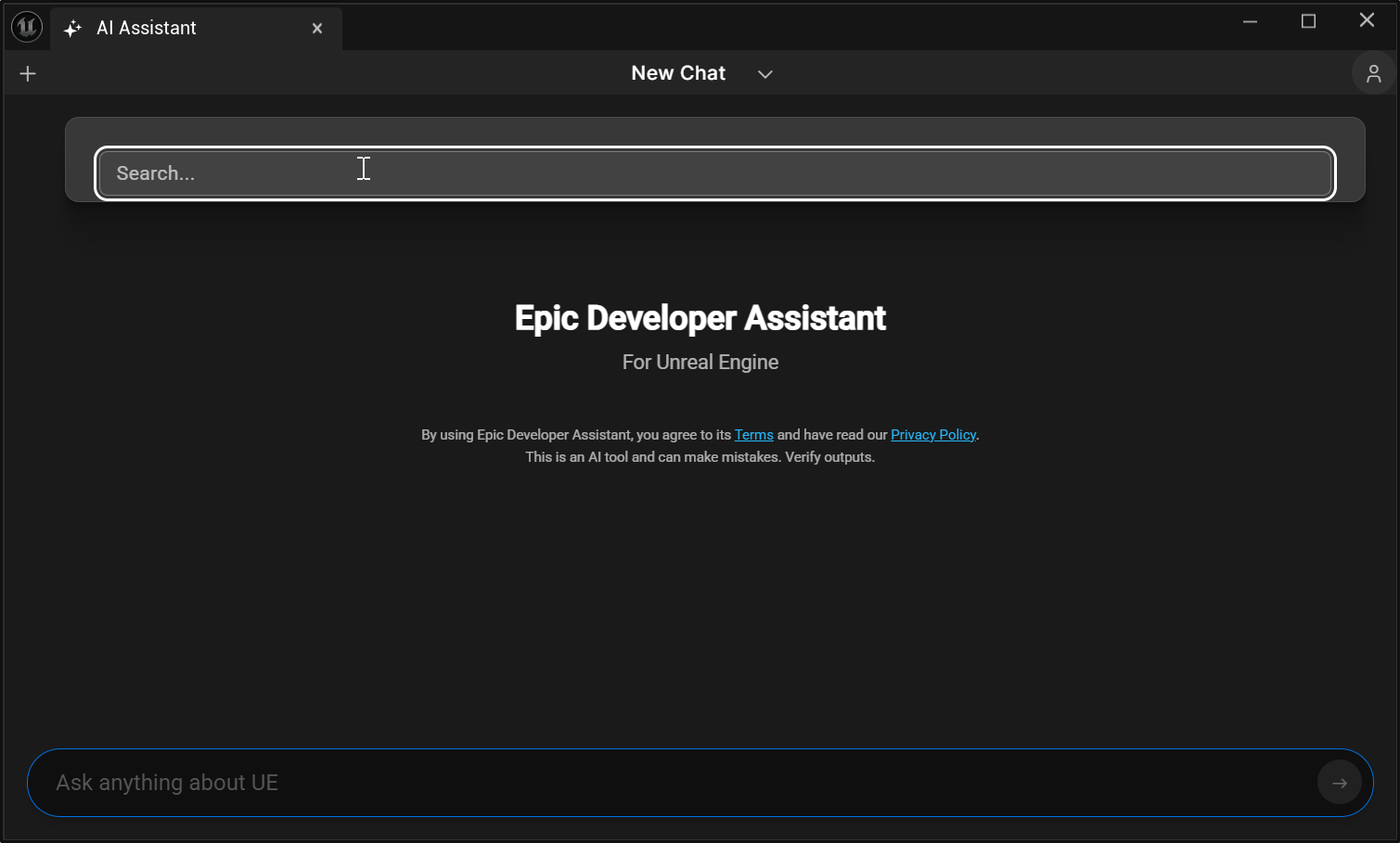

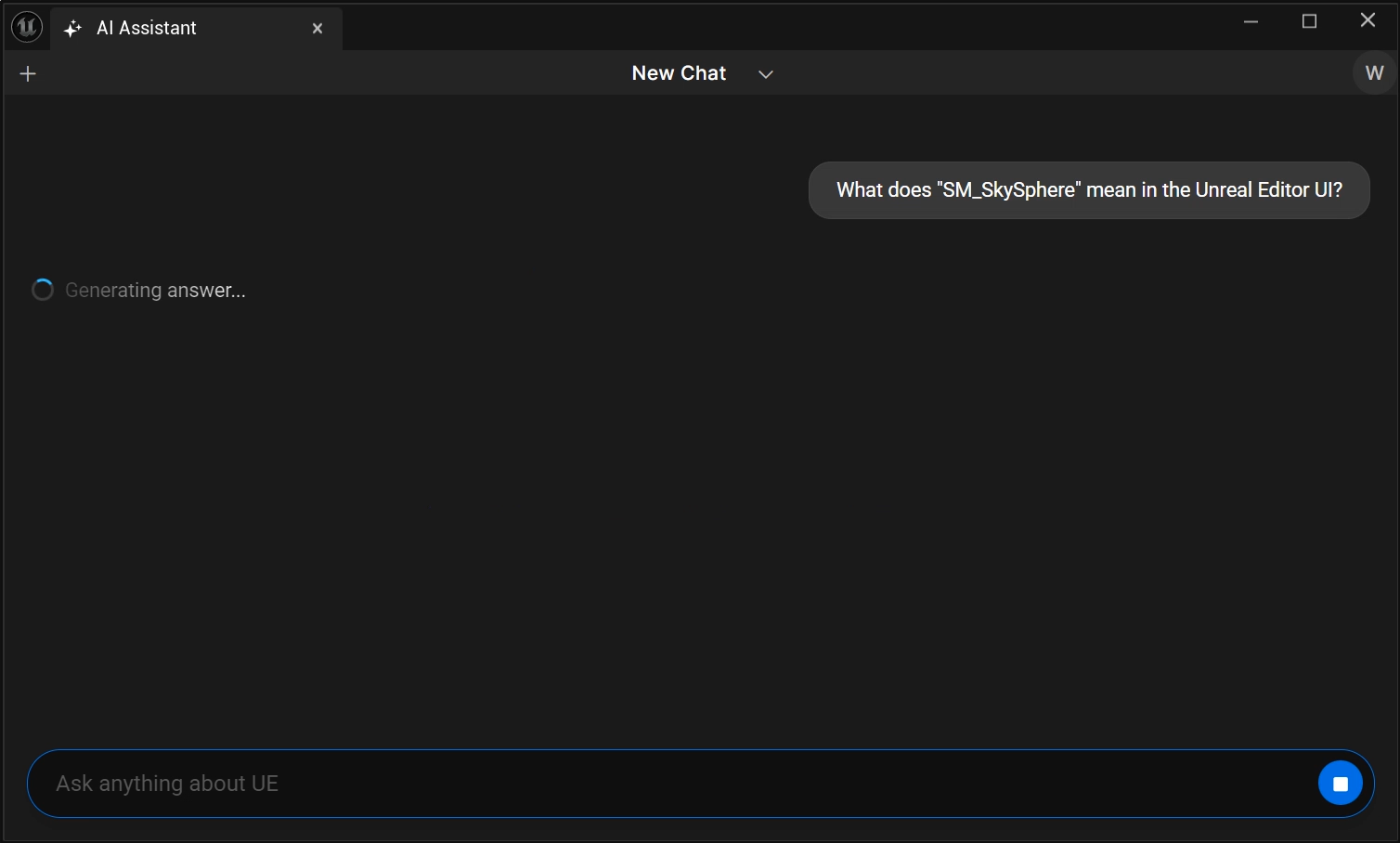

Continuing: don't we have a keyboard shortcut according to the source code? Let's try it. As I select objects in Unreal Engine and spam Ctrl-F1, the plugin creates prompts for us based on the selected actors, nodes, assets, etc.

We still encounter the same issue with failed API calls and get no replies. But we do get an early look at how the prompts are being constructed from the user's context.

This is as close as I can get to making it look like it's working for now. For actual responses, we will have to be patient. In the meantime, we can look at the source code a little more.

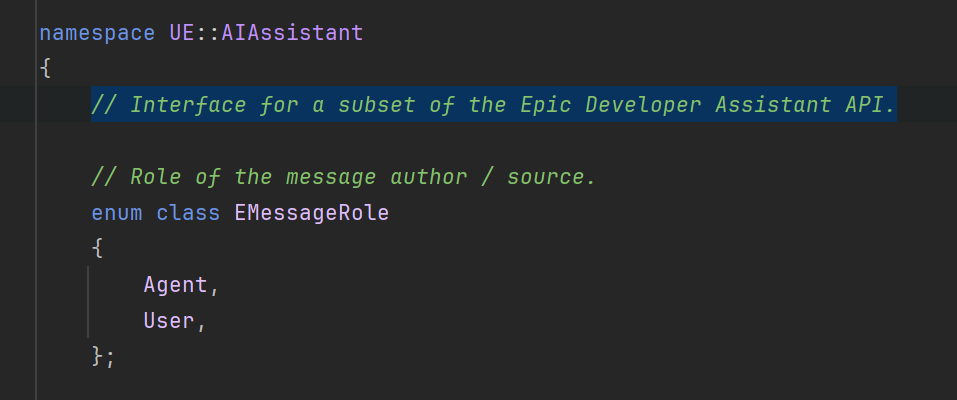

The Brains: The Epic Developer Assistant API (EDA)

Whether this Epic Developer Assistant API is an actual proprietary LLM or just the name of the web API isn't yet clear to me.

I seriously hope they roll with a custom, purpose-built AI tailored specifically for Unreal Engine development. That would be huge; it'd mean the AI is inherently knowledgeable about the engine's internal workings in a way no other model is. Or it could be a wrapper with Gemini under the hood, which just about anyone could create.

A Vision: Multimodal and Tool-Enabled

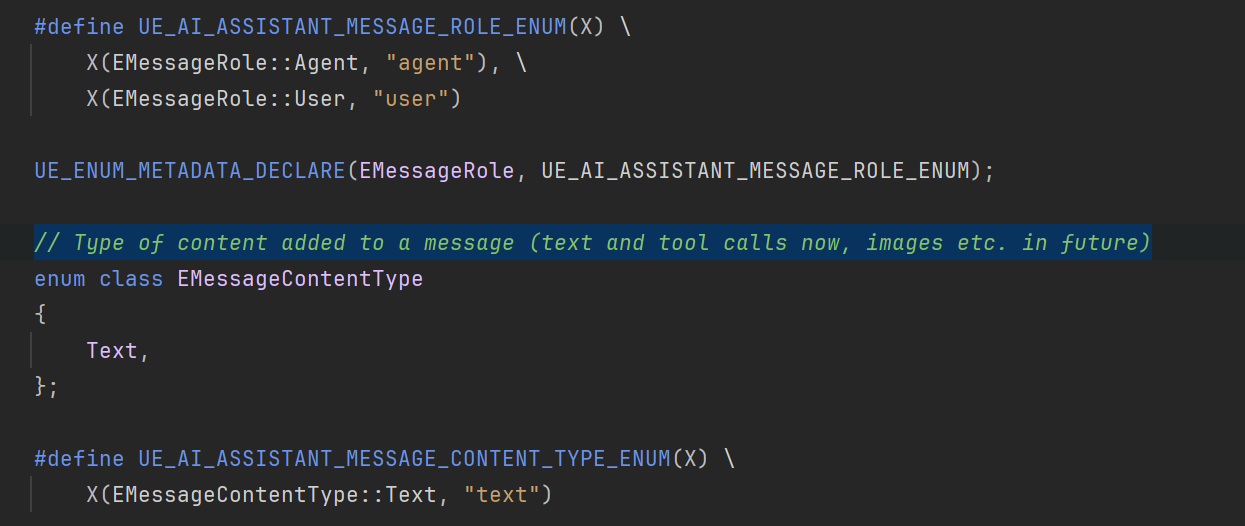

Perhaps one of the most exciting revelations comes from code comments within AIAssistantWebApi.h.

"Tool calls" suggest the AI will be able to invoke specific editor actions or even external tools programmatically, in line with the module's dependencies on PythonScriptPlugin and EditorScriptingUtilities.

"Images" implies a future where the AI can interpret visual information (like screenshots of your viewport) or perhaps even generate visual content. Imagine asking the AI to "make this material look more metallic" by showing it a screenshot. The potential is there.
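
Of the two, tool calls are the easier idea to sketch. In most chat APIs, a tool call is a small structured message naming a function and its arguments, which the client looks up and runs. The snippet below shows that general pattern only. The registry, the `rename_actor` tool, and the JSON shape are all invented for illustration; nothing here is taken from AIAssistantWebApi.h.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry: tool names and handlers are invented for illustration.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("rename_actor")
def rename_actor(old_name: str, new_name: str) -> str:
    # A real handler would call into the editor; this one only reports intent.
    return f"would rename {old_name} to {new_name}"


def dispatch(tool_call_json: str) -> Dict[str, Any]:
    """Look up the requested tool and run it with the supplied arguments."""
    call = json.loads(tool_call_json)
    handler = TOOLS.get(call.get("name"))
    if handler is None:
        # Unknown tools are reported back instead of raising.
        return {"ok": False, "error": f"unknown tool: {call.get('name')}"}
    return {"ok": True, "result": handler(**call.get("arguments", {}))}


request = '{"name": "rename_actor", "arguments": {"old_name": "Cube", "new_name": "Crate"}}'
print(dispatch(request))
```

An explicit allow-list like `TOOLS` is the usual design choice here, because it limits the model to actions the client has deliberately exposed.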

Game Changer: Deep Contextual Awareness Through Observation

This is where the AIAssistant appears to differentiate itself from simple ChatGPT assistance. Beyond receiving custom questions and auto-generating prompts, FAIAssistantInputProcessor seems designed to be aware of your context within the editor with more info being passed under the hood.

The AIAssistant won't be limited to responding to what you type. In the background, it will actually observe what you do, anticipate your needs, and perhaps offer relevant assistance proactively.

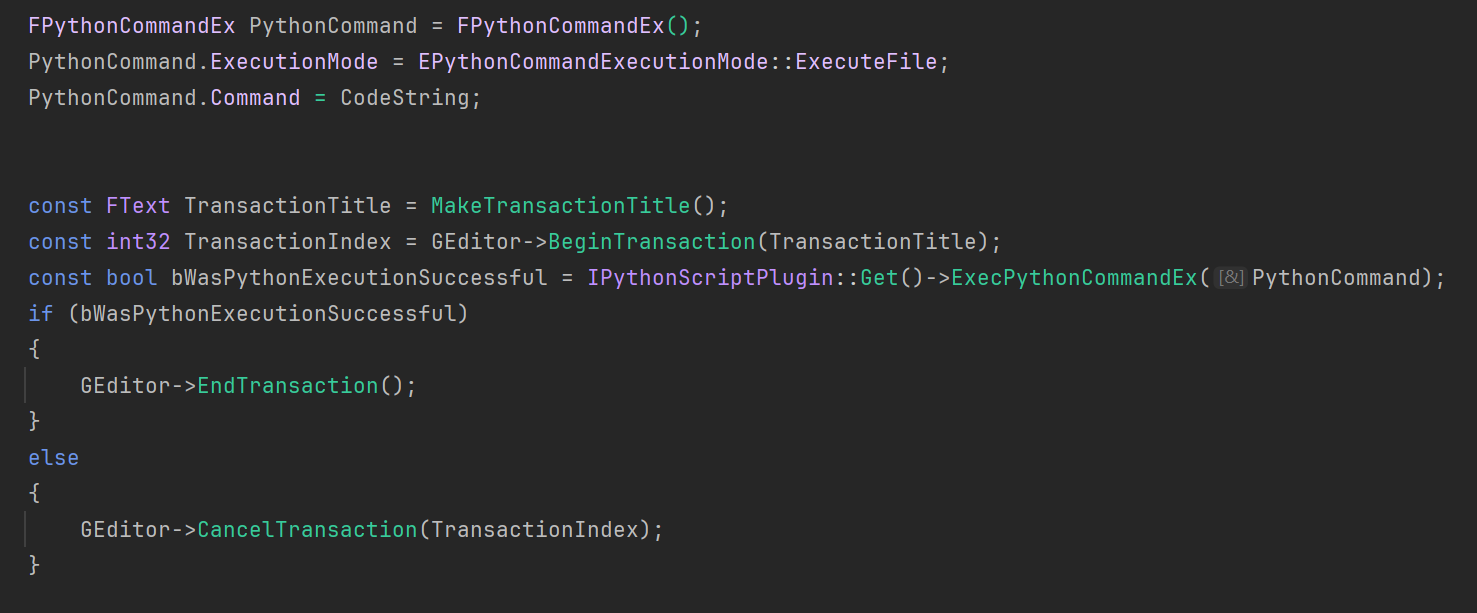

Automation: Python Scripting Bridge

A key part of the AIAssistant's power lies in its ability to act. AIAssistantPythonExecutor.h defines ExecutePythonScript(...).

This means the AI can programmatically execute arbitrary Python scripts within the editor environment. The current code indicates that these AI-driven script executions will be treated as editor transactions, which brings the all-important undo/redo functionality. So far, I have not found any code for editor utilities or scripting that does not go through Python.
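
To illustrate the transaction idea from the Python side, here is a minimal sketch that runs a script string inside one undoable editor transaction. It relies on `unreal.ScopedEditorTransaction`, which is part of the editor's Python API, and falls back to a no-op context when run outside the editor. The `run_in_transaction` wrapper is a hypothetical name of mine, and this is not how the plugin's C++ ExecutePythonScript is implemented.

```python
import contextlib

try:
    import unreal  # only available inside the Unreal Editor's Python environment
except ImportError:
    unreal = None  # lets the sketch run outside the editor as a dry run


def run_in_transaction(script: str, description: str = "AI Assistant script") -> dict:
    """Execute a script string inside a single editor transaction.

    Hypothetical helper: inside the editor, changes made by the script are
    grouped under one transaction; outside the editor it just runs the script.
    """
    if unreal is not None:
        scope = unreal.ScopedEditorTransaction(description)
    else:
        scope = contextlib.nullcontext()
    # The script sees `unreal` (or None) and leaves its variables in namespace.
    namespace = {"unreal": unreal}
    with scope:
        exec(script, namespace)
    return namespace


ns = run_in_transaction("result = 1 + 2")
print(ns["result"])
```

Running arbitrary generated code through `exec` is exactly as risky as it sounds, which is why wrapping it in something the user can undo matters so much.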

Big Picture: What This Means for Developers

The AIAssistant, even in its experimental state, is shaping up to be an intelligent, context-aware, and actionable partner. Imagine:

- Faster Iteration: Automating repetitive tasks with simple natural language commands.

- Reduced Friction: Getting relevant documentation, code examples, or Blueprint suggestions without leaving your current context.

- Intelligent Guidance: Receiving proactive advice based on your current work, helping you avoid common pitfalls or discover new techniques.

This feels like a pretty deep investment by Epic Games into intelligent tooling for Unreal Engine. It promises to reduce the cognitive load on developers, allowing us to focus more on creativity, ship faster, and level the playing field for new users.

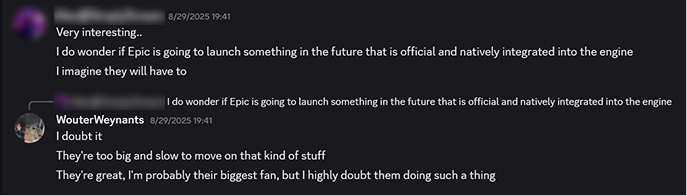

By the way, only recently I claimed Epic wouldn't do this.

I'm incredibly optimistic about the future of development with the AIAssistant. I'm building similar things on the side, so it's a win-win for me. Either Epic delivers and I have a sweet, sweet tool at my disposal, or their assistant bombs and I can turn my own experiments into worthy competitors. I sincerely hope they outpace me. It seems the groundwork being laid is solid. I will be keeping a very close eye on this, and you can expect more updates as this feature evolves.

WW
